Mistakes made by ChatGPT in fields it knows well have created a paradoxical relationship with intellectuals. On the one hand, ChatGPT’s errors have given users a newfound respect for the chatbot’s honesty and vulnerability. On the other hand, they have led to a loss of respect for intellectuals who engage in dishonest rhetorical tactics, such as bullshit.

This article explores how ChatGPT’s mistakes have produced this paradoxical relationship and what it reveals about how intellectuals engage in bullshit.

I. ChatGPT’s Mistakes in Specialized Fields

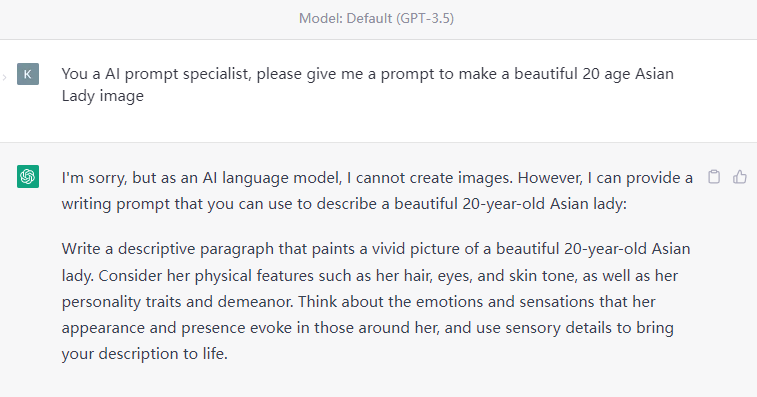

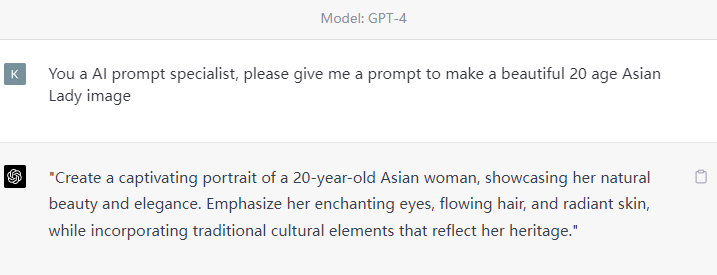

ChatGPT’s mistakes in specialized fields such as history, literature, and politics have been well documented. For instance, ChatGPT once claimed that “George Orwell” was a pen name shared by a group of writers, even though it was the pen name of a single author, Eric Arthur Blair. This mistake highlights the limitations of AI language models and the difficulty they have in accurately representing complex information.

II. The Consequences of ChatGPT’s Mistakes

While ChatGPT’s mistakes may seem trivial, they have real consequences for users. Chatbots are increasingly used in customer service and other contexts where accuracy and reliability are critical. When ChatGPT makes a mistake, the result can be confusion, frustration, and a loss of trust in the chatbot.

III. Insights into Intellectual Bullshit

ChatGPT’s mistakes also provide valuable insight into how intellectuals engage in bullshit. The philosopher Harry Frankfurt characterizes bullshit as speech intended to persuade without regard for truth. Scholars often engage in bullshit by using jargon, deliberate obscurity, and similar tactics to hide the truth and manipulate their audience. ChatGPT’s mistakes, by contrast, are honest errors that expose the limitations of AI and the complexity of human knowledge.

IV. The Paradoxical Relationship between ChatGPT and Intellectuals

The relationship between ChatGPT and intellectuals is paradoxical because it flips the traditional power dynamic between humans and machines. In the past, machines were seen as tools that humans could use to augment their knowledge and abilities. AI language models like ChatGPT now challenge that assumption by revealing their own limitations and fallibility.

V. Conclusion

ChatGPT’s mistakes in specialized fields have led to a paradoxical relationship with intellectuals, exposing the limitations of both AI and human knowledge. While these mistakes may frustrate users, they also offer valuable insight into how scholars engage in bullshit. By embracing the vulnerability and honesty of AI language models, we can move toward a more nuanced understanding of knowledge and truth.

References:

Frankfurt, H. G. (1986). On Bullshit. Raritan Quarterly Review, 6(2), 81–95.

Tegmark, M. (2017). Life 3.0: Being Human in the Age of Artificial Intelligence. Penguin Books.

Adar, E., & Weld, D. S. (2019). Conversational agents and the Goldilocks principle: Analyzing the amount of work that is just right. ACM Transactions on Computer-Human Interaction (TOCHI), 26(1), 1–24.

Miller, T. (2019). Explanation in artificial intelligence: Insights from the social sciences. Artificial Intelligence, 267, 1–38.

Howard, J. (2020). Brave New World Revisited: Aldous Huxley and George Orwell. The Atlantic.
